The day started badly: it was nearly over by the time I finally got an admin account for the computer. And that’s when the steamroller started!

When I work on a project, I break it into sub-units so that I can set goals and measure progress. I think two of the three are mostly done. The three sub-units are:

1., Eyesight alignment and relaxing stimulus (95% done)

2., Brightness control for the patch (70% done)

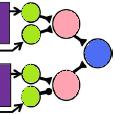

3., Frame alternator

The first one is practically ready. I am using a randomly arranged, static binary starfield, which gives a moderate overall brightness and doesn’t drive the monitor to full blast, which is very unpleasant in a completely dark room.
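A minimal sketch of how such a starfield could be generated so that its overall brightness is fixed in advance. It is written in Python rather than MATLAB, and the function names, the `density` parameter and the seeding are illustrative assumptions, not the actual stimulus code. Lighting an exact number of randomly chosen pixels makes the mean luminance equal to `density` (as a fraction of full white), wherever the stars happen to land.

```python
import random

def make_starfield(width, height, density, seed=None):
    """Return a static binary starfield as a list of rows of 0/1 pixels.

    Exactly round(density * width * height) pixels are lit, so the mean
    brightness is fixed regardless of where the stars fall.
    """
    if not 0.0 <= density <= 1.0:
        raise ValueError("density must be between 0 and 1")
    rng = random.Random(seed)  # seeded so the same field can be regenerated
    n_pixels = width * height
    n_stars = round(density * n_pixels)
    lit = set(rng.sample(range(n_pixels), n_stars))  # distinct random positions
    return [[1 if y * width + x in lit else 0 for x in range(width)]
            for y in range(height)]

def mean_brightness(field):
    """Fraction of lit pixels, i.e. average luminance relative to full white."""
    total = sum(len(row) for row in field)
    return sum(map(sum, field)) / total
```

For example, `make_starfield(640, 480, 0.02, seed=1)` lights 6144 pixels, giving a mean brightness of 2% of full white. The density value here is only an example.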

The second one was a struggle. Windows and libusb don’t seem to mix well: I crashed the entire OS (yes, not just MATLAB itself!) many times, and every crash cost a good 10 minutes of downtime while the machine rebooted. So I re-mapped the shade control as follows:

Coarse control: the absolute mouse Y coordinate (relative to the screen resolution).

Fine control: the left and right mouse buttons. Pressing the scroll wheel quits.
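A sketch of how the mapping above could be computed, assuming the shade is a value in [0, 1], mouse Y is measured in pixels from the top of the screen, the top of the screen is brightest, and the left button darkens while the right one brightens. All of these directions, the step size and the function names are hypothetical; the post does not specify them.

```python
def coarse_shade(mouse_y, screen_height):
    """Map absolute mouse Y (0 = top row) to a shade in [0, 1]; top = brightest (assumed)."""
    y = min(max(mouse_y, 0), screen_height - 1)  # clamp to the visible screen
    return 1.0 - y / (screen_height - 1)

def update_fine_offset(offset, left_pressed, right_pressed, step=1 / 255):
    """Left click nudges the shade down, right click nudges it up (assumed directions)."""
    if left_pressed:
        offset -= step
    if right_pressed:
        offset += step
    return offset

def patch_shade(mouse_y, screen_height, offset):
    """Combine the coarse and fine controls and clamp the result to [0, 1]."""
    return min(max(coarse_shade(mouse_y, screen_height) + offset, 0.0), 1.0)
```

This assumes each call to `update_fine_offset` receives one click event. If the raw button state is read every poll instead, a held button would keep stepping, so the press edges would need to be detected first.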

Originally, the plan was to use the scroll wheel together with the derivative of the mouse Y coordinate (that is, its relative movement).

I am concerned about performance: will I have enough ‘horsepower’ to render four patches at 140 frames per second? I only need to check the UI every 4-5 frames, which should give some relief.
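The frame budget and the polling schedule can be sketched as follows. It is written in Python, and `draw`, `poll` and `POLL_EVERY` are hypothetical placeholders for the real drawing and mouse-reading calls. Every frame is drawn, but input is read only on every Nth frame.

```python
REFRESH_HZ = 140
POLL_EVERY = 4  # hypothetical: read the mouse on every 4th frame

def frame_budget_ms(refresh_hz):
    """Time available to draw one frame, in milliseconds."""
    return 1000.0 / refresh_hz

def should_poll(frame_index, poll_every=POLL_EVERY):
    """True on the frames where the UI (mouse) state should be read."""
    return frame_index % poll_every == 0

def run_frames(n_frames, draw, poll, poll_every=POLL_EVERY):
    """Draw every frame, poll input only every `poll_every` frames.
    Returns the number of polls performed."""
    polls = 0
    for i in range(n_frames):
        if should_poll(i, poll_every):
            poll()
            polls += 1
        draw(i)
    return polls
```

At 140 Hz each frame has about 7.14 ms for all four patches. Polling every 4 frames means 35 input reads per second (one every ≈28.6 ms), and polling every 5 frames means 28 reads per second (≈35.7 ms). That is probably still responsive enough for adjusting a shade by hand.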

Also, declaring and initialising variables before the loops (preallocation) could save further computation time. We will see.
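The pattern is sketched here in Python rather than MATLAB. Anything that stays constant between frames (here, the patch rectangles) is computed once before the loop, and the per-frame log is preallocated to a fixed length instead of growing each frame. The patch layout, the sizes and the timestamp log are hypothetical illustrations, not the actual experiment code.

```python
import time

def patch_rects(screen_w, screen_h, n_patches=4, size=100):
    """Hypothetical layout: n_patches squares evenly spaced along the horizontal centre line.
    Each rectangle is (left, top, right, bottom) in pixels."""
    gap = (screen_w - n_patches * size) / (n_patches + 1)
    top = (screen_h - size) / 2
    return [(gap + i * (size + gap), top, gap + i * (size + gap) + size, top + size)
            for i in range(n_patches)]

def render(n_frames, screen_w, screen_h, draw_rect):
    """Render loop with loop-invariant work hoisted out and a preallocated log."""
    rects = patch_rects(screen_w, screen_h)  # computed once, not on every frame
    timestamps = [0.0] * n_frames            # preallocated, fixed length
    for i in range(n_frames):
        for r in rects:
            draw_rect(r)
        timestamps[i] = time.perf_counter()  # frame times, e.g. to spot dropped frames
    return timestamps
```

In MATLAB the equivalent would be to compute the rectangles and call `zeros(1, nFrames)` before the loop rather than extending arrays inside it. Growing an array on every iteration forces repeated reallocation.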

I have fiddled with the photometer as well: it doesn’t seem to behave as the protocol describes. It regularly sends garbage randomly mixed in with the data. Not good. I will look at the outgoing signal with an oscilloscope; the last time I had this problem with an old kit, it was caused by inadequate smoothing on the power rail. Also, the poor thing hasn’t been calibrated in two years!
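Until the hardware cause is found, one stopgap is to keep only well-formed readings and count everything else as garbage. The sketch below assumes a hypothetical format of one ASCII decimal number per line and an arbitrary plausible range. The photometer’s real protocol is not described here, so both the pattern and the range would have to be adapted.

```python
import re

# Hypothetical frame format: one ASCII reading per line, e.g. "12.345".
READING = re.compile(r"^\s*(\d+(?:\.\d+)?)\s*$")

def parse_readings(raw: bytes, lo=0.0, hi=10000.0):
    """Split a raw serial capture into lines, keep well-formed in-range readings,
    and count every other non-empty line as garbage."""
    values, garbage = [], 0
    for line in raw.splitlines():
        if not line.strip():
            continue  # ignore blank lines between readings
        text = line.decode("ascii", errors="replace")  # non-ASCII bytes become U+FFFD
        m = READING.match(text)
        if m and lo <= float(m.group(1)) <= hi:
            values.append(float(m.group(1)))
        else:
            garbage += 1
    return values, garbage
```

For example, `parse_readings(b"12.5\r\n\xff\xfe#!\r\n13.0\r\n99999\r\n")` keeps `[12.5, 13.0]` and counts 2 garbage lines. Tracking the garbage count also gives a simple way to check whether it drops once the power rail is better smoothed.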