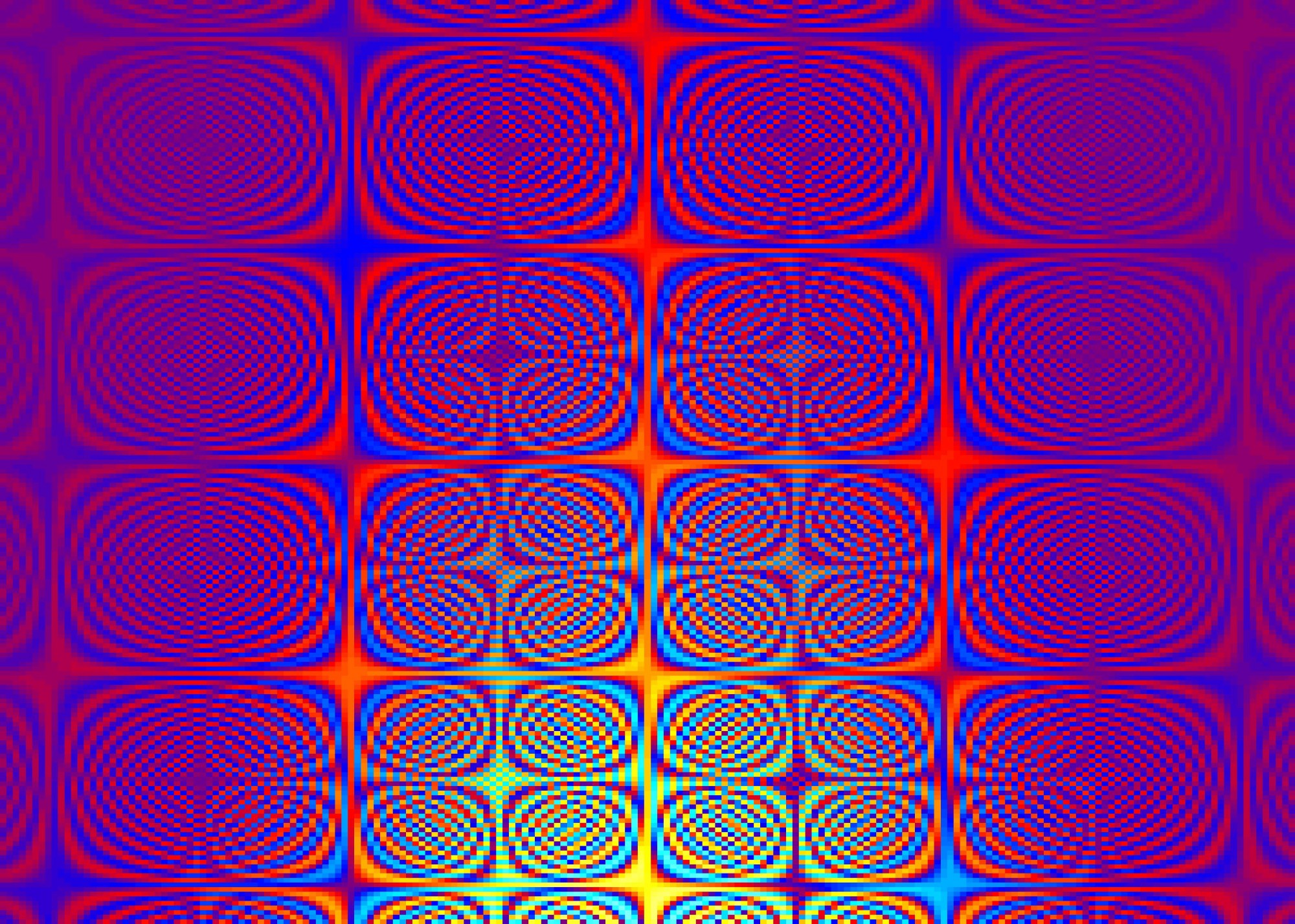

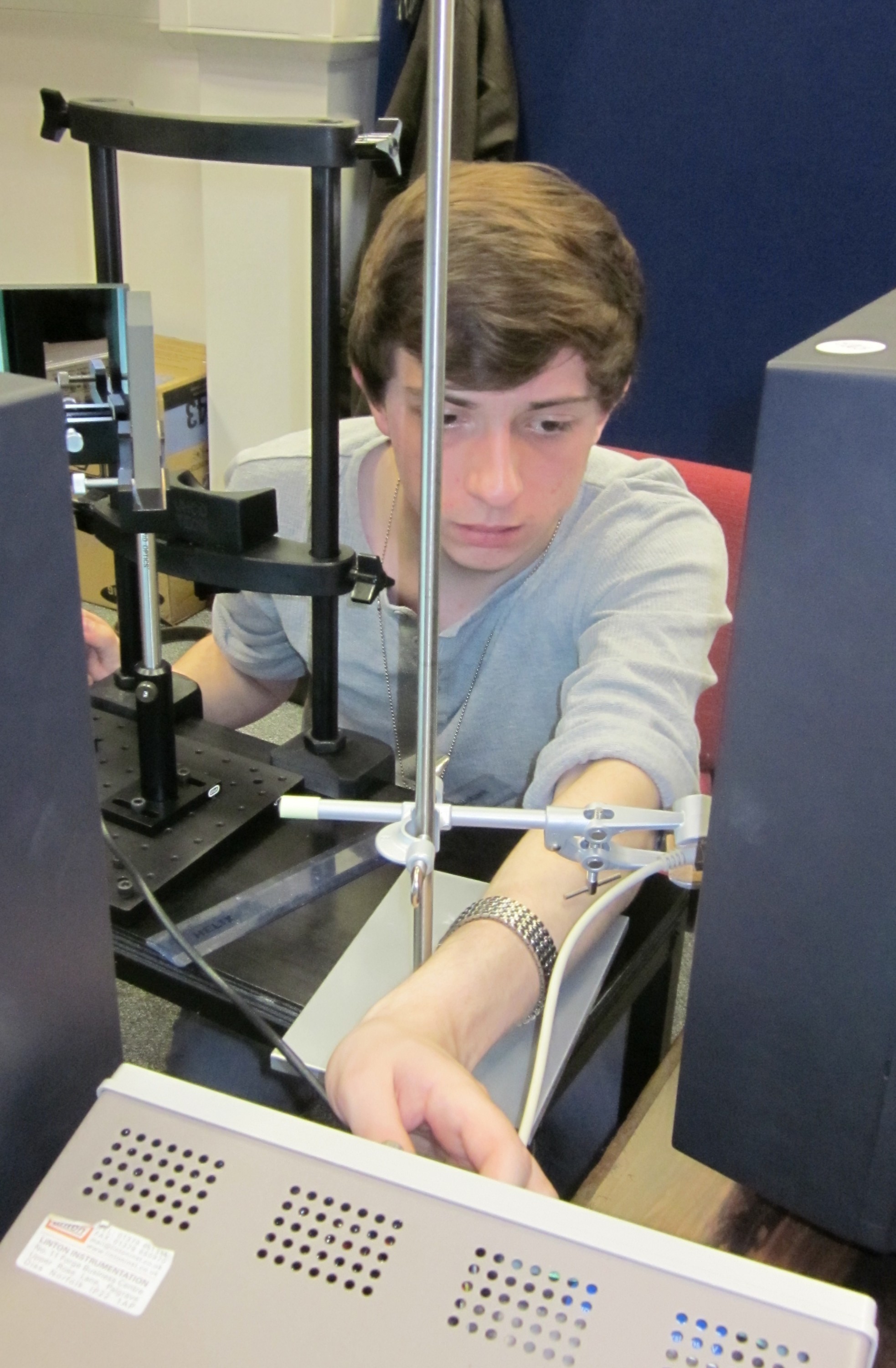

This week has been a very varied and interesting one. I began by sorting out some acetate sheets for my perspex, on which I could draw some stimuli, have them represented on the computer, and then check whether my program was working as I intended (see the pictures for examples of the lines and 3D cube used; I also used some random points, not pictured, and eventually those worked too). The immediate problem I could see was that the pictures lined up perfectly when my angle of rotation was zero, but if I rotated clockwise the images translated left, and if I rotated anticlockwise they translated right. From this I realised that the centre of rotation I had set up (the centre of the television) was not in fact where the origin of my screenspace was. I adjusted the stand accordingly, by moving the television backwards, and it all worked perfectly.
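To see why a misplaced pivot shows up as a sideways slide, here is a minimal sketch of the geometry in a top-down view. It assumes the screenspace origin sits a small distance in front of the pivot of the stand; the function name `lateral_shift`, the 5 cm offset and the sign convention are all made up for illustration and are not measurements from my setup.

```python
import math

def lateral_shift(offset_cm, angle_deg):
    """Sideways displacement of the screenspace origin after rotating the stand.

    offset_cm: hypothetical distance between the pivot and the screenspace
               origin, measured along the viewing direction (top-down view).
    angle_deg: rotation of the stand; the sign only encodes direction.
    """
    return offset_cm * math.sin(math.radians(angle_deg))

# No shift at zero rotation, equal and opposite shifts either side of it.
for angle in (-30, 0, 30):
    print(angle, round(lateral_shift(5.0, angle), 2))

# With the pivot directly under the origin (offset 0) the shift vanishes.
print(lateral_shift(0.0, 30))
```

The shift is zero at zero rotation and changes sign with the direction of rotation, which matches the pattern I saw; setting the offset to zero is the equivalent of moving the television until the pivot and the origin coincide.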

After that I ran through the experiment myself for a full set of data and analysed the results, which show, as I suspected, that the slight translation made very little difference to the perception of the cube.

I am still working on getting the cubes working in OpenGL, which is becoming less painful the more I look at it: the cubes now warp, and the backs are hidden when they should not be visible. It is still a work in progress, so watch this space. The advantage I have is that the ‘extra parts’, such as turning it 3D, randomly interleaving many different variables and recording the results, should be relatively straightforward, as they can just be ported from phase 1.

I helped out at Kids Kabin again this week (Ann, the supervisor, came to make up numbers and said my calling in life must eventually involve teaching, which was quite a nice compliment!). I have begun working on my dissertation in earnest (reading and re-reading appropriate papers, starting my introduction and materials and methods sections, etc.). I have also signed up to attend a conference on MATLAB in late June (only a week before my wedding) and have worked on my presentation for a conference in a fortnight's time.

Next week I intend to continue with the OpenGL work. I am thinking of different ways I could present the problem in case what we are trying is physically impossible. One possibility is an adjustment task, where the cube warps in response to right or left keypresses and the participant keeps adjusting, starting from different angles, until they believe the cube is no longer warped. I think that would be both feasible to program and quite interesting. I will see what Jenny thinks next week.
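As a rough idea of how one trial of such an adjustment task might be structured, here is a minimal sketch of the logic only. Everything in it is an assumption for illustration: the function name `run_adjustment_trial`, the "left"/"right"/"accept" key labels, the one-degree step, and the idea of replaying a list of keypresses rather than reading the keyboard. A real version would read keys from the experiment's own input handling and redraw the cube in OpenGL after each press.

```python
def run_adjustment_trial(start_warp_deg, keypresses, step_deg=1.0):
    """Return the warp setting the participant settles on for one trial.

    start_warp_deg: warp applied to the cube at the start of the trial.
    keypresses:     sequence of "left", "right" or "accept" (hypothetical labels).
    step_deg:       how much each left/right press changes the warp.
    """
    warp = start_warp_deg
    for key in keypresses:
        if key == "left":
            warp -= step_deg
        elif key == "right":
            warp += step_deg
        elif key == "accept":
            break  # participant believes the cube is no longer warped
        # any other key is ignored
    return warp

# One trial starting 3 degrees warped; presses after "accept" are ignored.
final = run_adjustment_trial(3.0, ["left", "left", "left", "accept", "right"])
print(final)
```

The value returned at "accept" would be the recorded response for that trial, and running it from several different starting angles gives the set of settings to analyse.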

And I’m delighted to announce that
