I recently organised a Training School on 3D Displays and the Human Visual System as part of the European Training Network on Full-Parallax Imaging. The Network aims to train up 15 Early Stage Researchers who not only have expertise in both optics and engineering but also understand human visual perception. The researchers come from a variety of disciplinary backgrounds, mainly engineering, so this first Training School aimed to equip them with the relevant knowledge of the human visual system.

As part of this, I gave three lectures. I'm sharing the videos and slides here in case these lectures are useful more widely. Drop me a comment below with any feedback!

Lecture 1: The eye.

Basic anatomy / physiology of the eye. Photoreceptors, retina, pupil, lens, cornea. Rods, cones, colour perception. Dynamic range, cone adaptation. Slides are here.
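As a rough illustration of how adaptation lets a photoreceptor with a limited response range cope with a huge range of light levels, here is a minimal Python sketch. It uses the Naka-Rushton saturating function, a common textbook description of photoreceptor responses, and assumes (as a simplification, not necessarily the model used in the lecture) that adaptation just sets the semi-saturation constant `sigma` equal to the background intensity. The helper names `naka_rushton` and `increment_response` are hypothetical, and intensities are in arbitrary units.

```python
def naka_rushton(intensity: float, sigma: float, n: float = 1.0) -> float:
    # Normalised response R/Rmax = I^n / (I^n + sigma^n); saturates towards 1.
    return intensity ** n / (intensity ** n + sigma ** n)


def increment_response(background: float, sigma: float, weber: float = 0.1) -> float:
    # Response change produced by a flash 10% brighter than the background.
    return naka_rushton(background * (1 + weber), sigma) - naka_rushton(background, sigma)


# Backgrounds spanning six log units of intensity (arbitrary units).
backgrounds = [1.0, 1e2, 1e4, 1e6]

for b in backgrounds:
    fixed = increment_response(b, sigma=1.0)  # no adaptation: sigma never moves
    adapted = increment_response(b, sigma=b)  # assumed adaptation: sigma tracks background
    print(f"background {b:>9.0f}: fixed {fixed:.7f}, adapted {adapted:.7f}")
```

With `sigma` fixed, the response saturates on bright backgrounds and a 10% increment produces almost no change; with `sigma` tracking the background, the same relative increment gives the same response change at every light level, which is the Weber-law behaviour adaptation is meant to buy.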

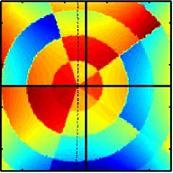

Lecture 2: The human contrast sensitivity function.

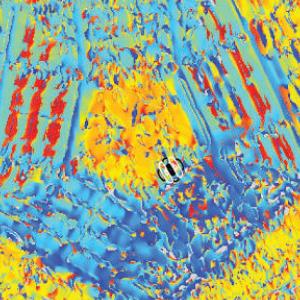

Disparity, stereopsis. Stereoacuity and stereoblindness. Binocular vision disorders: amblyopia, strabismus. The correspondence problem. Issues with mobile eyes; epipolar lines, search zones. Different forms of human stereopsis: fine/coarse, contour vs RDS. Slides are here.

Lecture 3: Stereopsis.

Spatial and temporal frequency. Difference between band-pass luminance CSF and low-pass chromatic CSF; applications to encoding of colour. Fourier spectra of images. Models of the CSF. Slides are here.
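As a rough illustration of the band-pass shape of the luminance CSF, here is a Python sketch of one classic analytic model, the Mannos and Sakrison (1974) formula, with spatial frequency in cycles per degree of visual angle. It is not necessarily one of the models covered in the lecture, and it describes luminance contrast only, not the low-pass chromatic CSF. The function name `mannos_sakrison_csf` is just a label chosen for this example.

```python
import math


def mannos_sakrison_csf(f_cpd: float) -> float:
    # Relative luminance contrast sensitivity at spatial frequency f_cpd
    # (cycles per degree), after Mannos & Sakrison (1974):
    # H(f) = 2.6 * (0.0192 + 0.114 f) * exp(-(0.114 f)^1.1)
    x = 0.114 * f_cpd
    return 2.6 * (0.0192 + x) * math.exp(-(x ** 1.1))


# Sample 0.1 to 60 cycles/degree in steps of 0.1 and find the most sensitive frequency.
freqs = [0.1 * i for i in range(1, 601)]
peak_f = max(freqs, key=mannos_sakrison_csf)

print(f"peak sensitivity near {peak_f:.1f} cycles/degree")
for f in (0.5, 2.0, 8.0, 16.0, 32.0):
    print(f"{f:5.1f} c/deg -> {mannos_sakrison_csf(f):.3f}")
```

Sensitivity falls off at both low and high spatial frequencies, with the peak at roughly 8 cycles per degree, which is what "band-pass" means here.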