Our energetic team submitted a number of entries to the Royal Society’s Picturing Science competition.

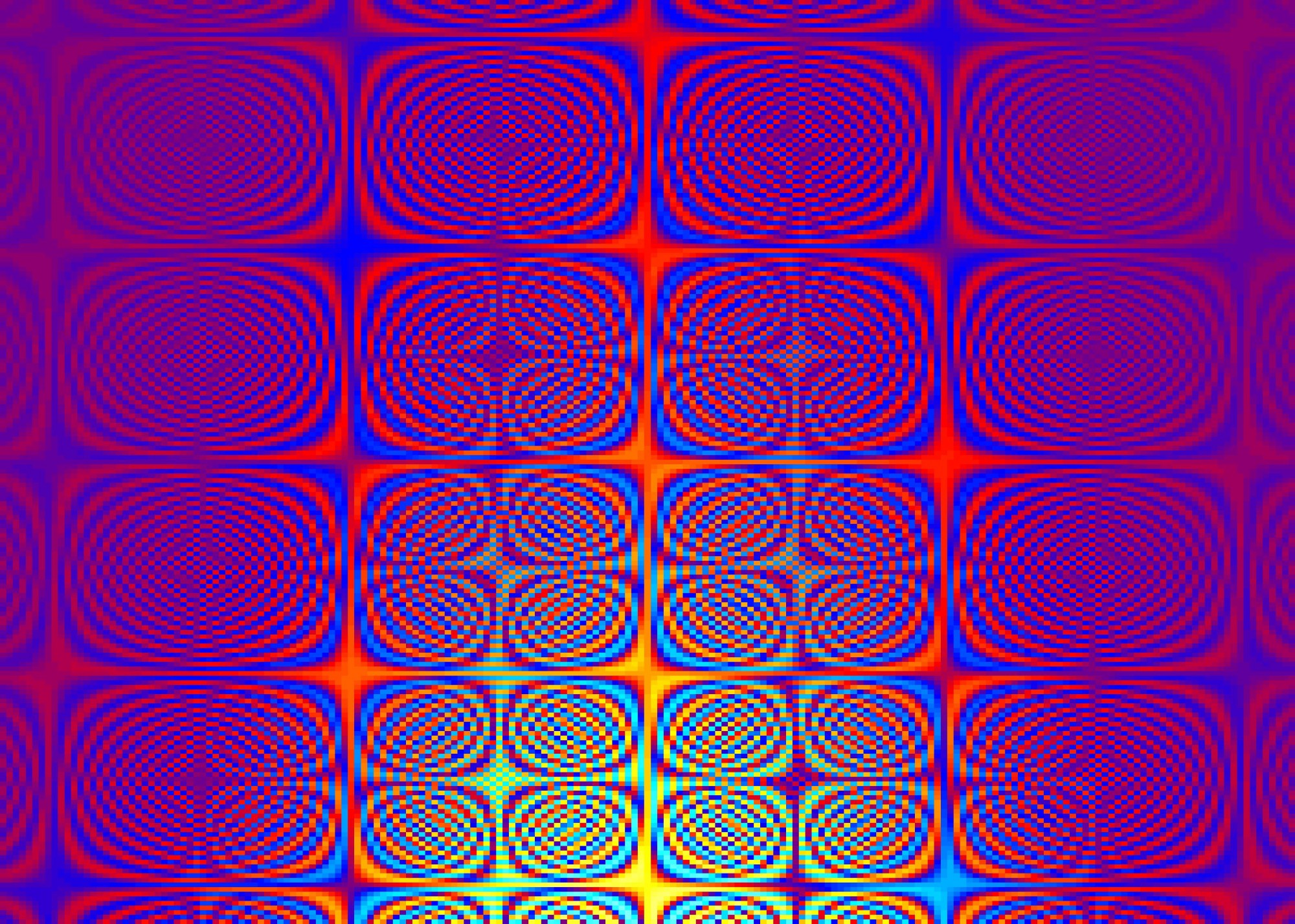

Jenny: This is an image I created in the scientific programming environment Matlab while doing some maths, trying to understand a particular aspect of stereo ("3D") vision. The funny thing is that I can no longer even remember how I generated it! I think it is probably a Fourier phase spectrum of some sort. The image came up in the course of my work; I saved the figure and carried on. Much later I came back to it and was struck by how beautiful and complex it is. With its repeating cells of interlocking curves, it reminds me of Celtic knotwork like that found in the Lindisfarne Gospels.

Jenny: An occupational hazard of being a scientist's daughter is being roped into experiments. For this project, we were interested in where children looked when making judgments about pictures. We developed a system that displayed pictures to children on a computer touchscreen; an eye tracker in front of them monitored their eye movements as they scanned each picture, and the children then touched the screen to indicate their judgment. We had the children sit in a car seat so that their head stayed in roughly the same position and the eye tracker didn't lose sight of their eyes. An adjustable arm helped us position the touchscreen at the right distance for each child, and the company Tracksys kindly loaned us an eye tracker. My little girl helped us get it all working and patiently recorded the words we needed for the experiment, so that the computer could "speak" in a nice, friendly child's voice.

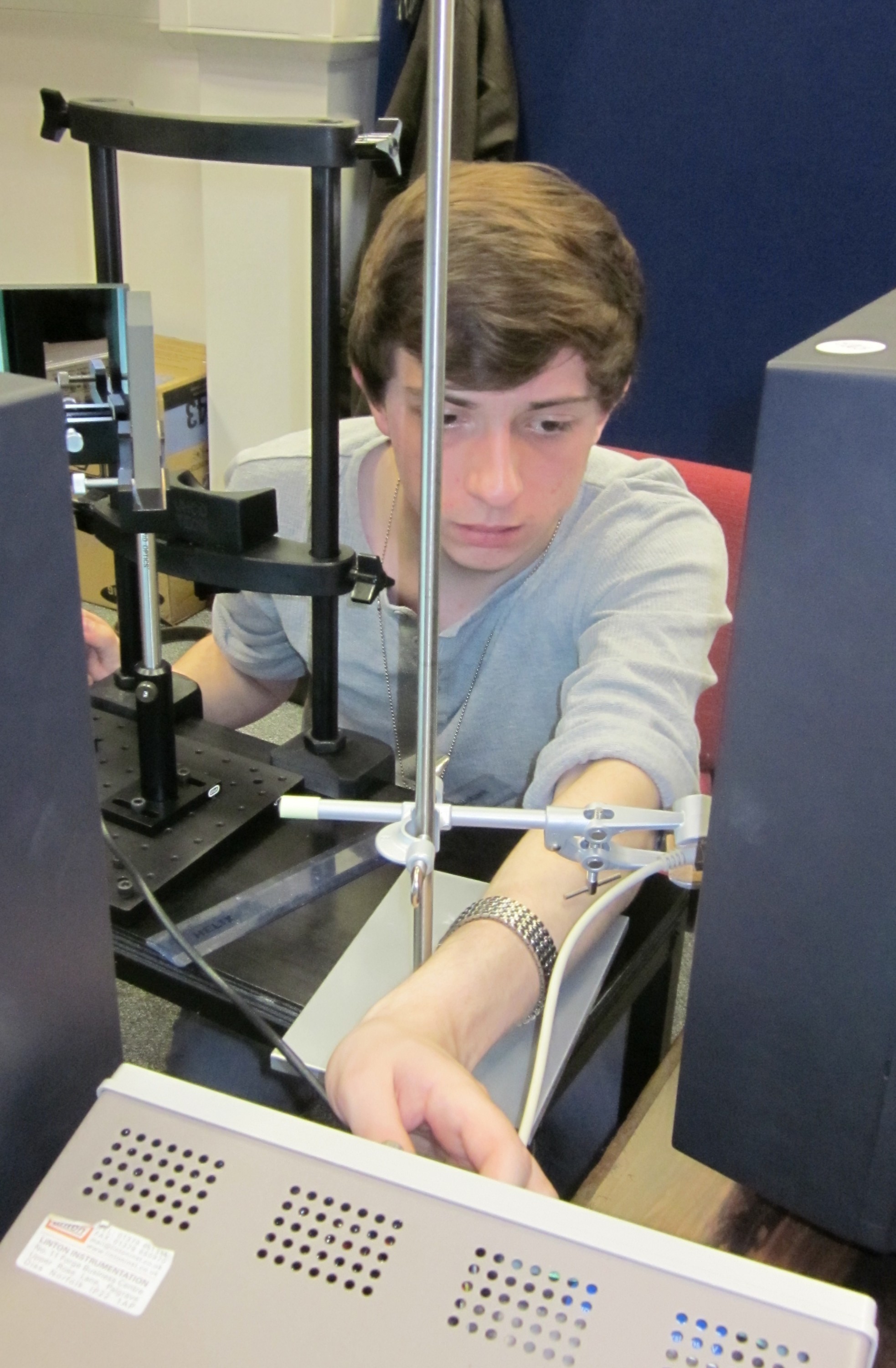

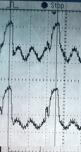

Jenny: This photo shows undergraduate student Steven Errington checking the calibration of a mirror stereoscope. This is one of the oldest forms of 3D display, and uses mirrors to present different images to the two eyes. The observer will sit with their head in the headrest in front of Steven, and the two mirrors in front of them will ensure that their left eye views a computer monitor on their left, while their right eye views a different monitor on their right. It's essential that the two monitors are aligned in both space and time; in particular, they must update their images at exactly the same moment. Steven has clamped a photodiode in front of each monitor (that's the white cable running down by his hand) and fed their outputs into an oscilloscope. A photodiode outputs a voltage that depends on the light falling onto it, so each new image presented on a monitor shows up as a "blip" on the oscilloscope. Steven can then check that the blips from the two monitors occur at exactly the same time, to sub-millisecond precision.

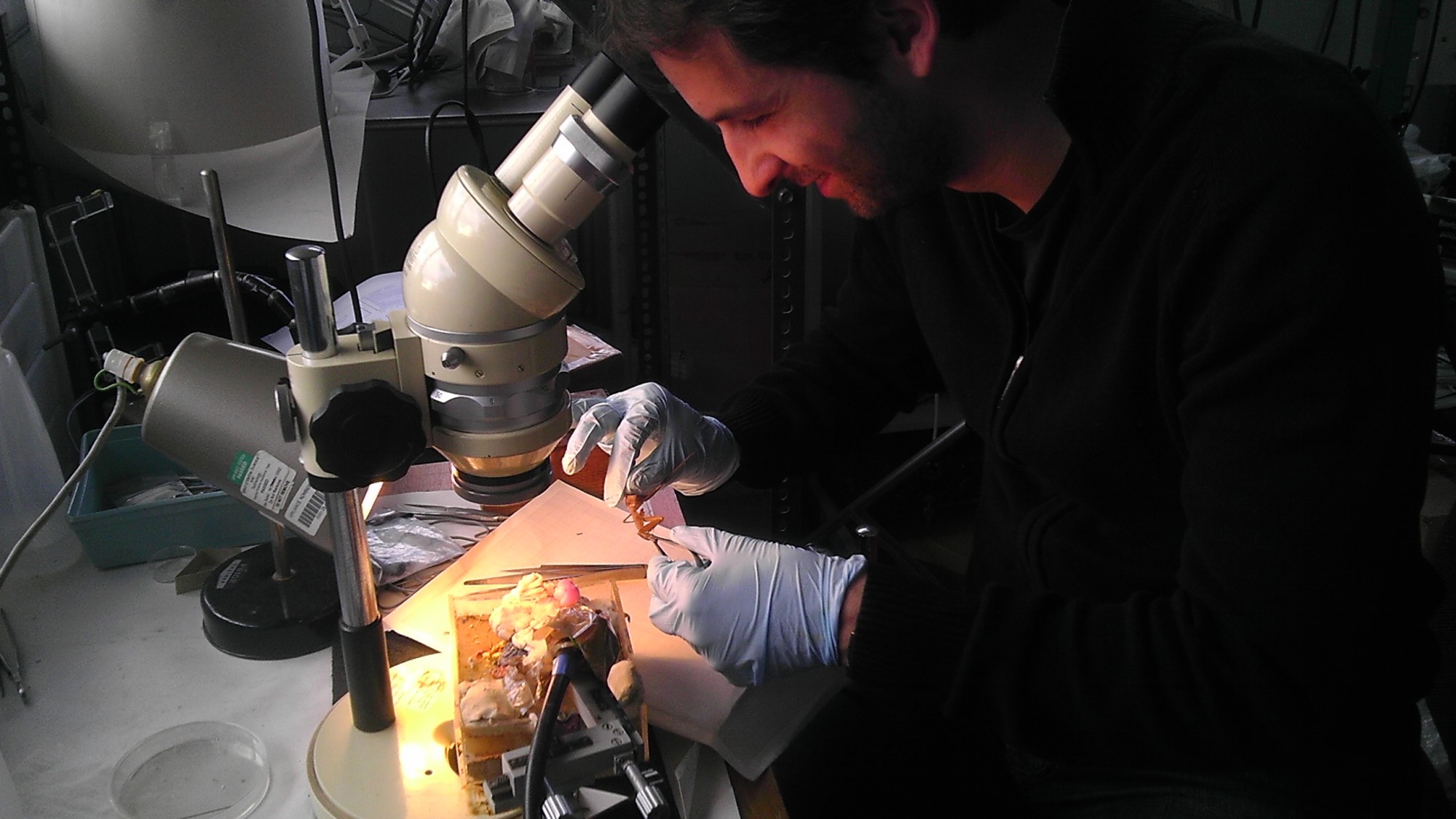

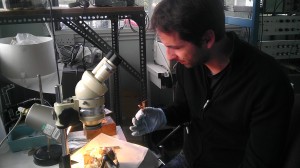

Jenny: Dr Ronny Rosner, an expert in insect visual neurophysiology, prepares to dissect a praying mantis in Dr Claire Rind’s insect lab at Newcastle University. This is part of a major new project in my lab, “Man, Mantis and Machine”, funded by the Leverhulme Trust. The praying mantis is the only invertebrate known to have a form of 3D vision, which it uses to help it strike at prey. We want to understand the neuronal circuits in its brain that make this possible, in order to compare them to similar circuits in human beings and other animals, and to 3D vision algorithms in computers and robots. Ronny will be joining my lab next year to bring his expertise to bear on these questions.

Paul: This experiment is designed to test orientation cues with stereo 3D (S3D) displays. The subject sits behind a curtain with a square cut out of it, so they can't see that the television is in fact rotated through an angle and is therefore not perpendicular to their line of sight. Because the display is S3D, the subject cannot detect this change in orientation and assumes that the screen is frontoparallel (perpendicular). This means that when we show the stimulus (in this experiment, a pair of rotating cubes), the cubes look warped unless they are projected for the angle at which the subject is sitting (orthostereo). In contrast, when the curtain is removed and the television can be seen to be rotated, the subject's brain corrects for the screen not being perpendicular: the orthostereo cube (rendered for the angle) now looks warped, and the cube rendered for a perpendicular screen looks correctly projected.

Jenny: Three local sixth-formers did a summer project in my lab, funded by the Nuffield Foundation. As part of this, they collected experimental data from members of the public at Newcastle's Centre for Life. Here, they are setting up their equipment, ready for another busy day running experiments. Their work was eventually published in two scientific papers, both in the journal i-Perception, with the young people as authors.

And I’m delighted to announce that